| Story | Views | Duration |
|---|---|---|
| 31. Handing in my dissertation | 70 | 05:55 |
| 32. A proposal for the Genetic Citation Index | 57 | 03:00 |
| 33. The indexing project | 80 | 04:03 |
| 34. Financial problems for the Scientific Citation Index | 70 | 06:03 |
| 35. Problems starting The Scientist | 65 | 06:33 |
| 36. The ups and downs of the business and the office atmosphere | 57 | 01:16 |
| 37. The impact of scientific information systems on scientific... | 68 | 05:02 |
| 38. Impact factors | 111 | 05:33 |
| 39. Historiographs | 60 | 04:28 |
| 40. Usefulness of citations for historians | 59 | 03:45 |

What's the value, the impact of scientific information systems on the progress of science? How do you demonstrate that? I said that it's similar to the difficulty of measuring the economic impact of basic research. It's, it's a truism, everybody believes in it, but proving is something else, you know. When you're a chemist, you're trained to use literature, right? They take it for granted that your time is well spent if you spend time in the library. Wasn't always true that way, you know. There were times in the past, especially in Germany and other countries, a scientist could, had to have permission to go into a library. He couldn't be wasting time out of a lab; they wanted him to work in the lab. As though every minute of the day spent in the lab could be, you know, productive. I guess you could watch the bugs multiply faster. But, I can't... anyhow, it turns out that there are people apparently now who are trying to, in terms of science policy analysis, to demonstrate the value of information systems. I mean, we, we take it for granted that the marketplace determines that. If it wasn't so important to industry and academia, why would they be spending all these billions of dollars in paying for information systems? You know, there seems to be some value there.

[Q] It was important to scientists' careers to have the citation index. I mean...

Well, it's important now because using citation frequencies and measurements have begun to be incorporated into the, the evaluation process, the tenure process, the research assessment exercises and so forth. But it wasn't always that way. I mean, in the past they just relied on subjective opinions and that's a, that's a, you know, I keep getting confronted with this idea that I'm responsible for a Frankenstein, you know, that we created this thing and there are a lot of people who hate us for it.

[Q] You're being imitated, you're being imitated by all these other players.

Yeah, but I mean, still you get all these, what's the word, critical remarks and dig out the latest versions of... editorials by people who are so upset by the fact that numbers are being used to evaluate people, as though they were never used before. And as though peer review was a far better reliable, you know, subjective views are more reliable, maybe in some cases they are better. Somebody has an intuition about somebody, somebody who has got high numbers, but you think he's a jerk and other people who have low numbers, you think they're brilliant. I mean, that's a personal opinion. But what's the collective opinion? You did that study on the collagen thing which was a classic which shows that in most fields anyhow you probably find that 95% of the time, the people that turn out to be the most respected are the ones with the highest citation counts or certainly among the top five or ten, right?

[Q] They'll be perceived as the leaders in the field.

Well, all these snide remarks that people make about citation analysis, I always tell them at the meetings, I never encountered a Nobel class scientist who hadn't published a citation classic. You know, you look up their records and in fact, more than one, several, frequently. And if they haven't there's always a very good explanation for it, that in fact, you could then use the citation history to trace the reason why there was no citation recognition, it was a long-term delayed recognition. So, on the one hand people are always citing examples of delayed recognition of which there are very few, by the way. But Wolfgang and I did a paper in Scientometrics a couple of years ago, we did that study of how rarely you can find them, yet people have this impression that it's a widespread phenomena and therefore you can't use citation analysis frequently. If it was very common then you wouldn't want to rely on it, but it's really such a rare thing.

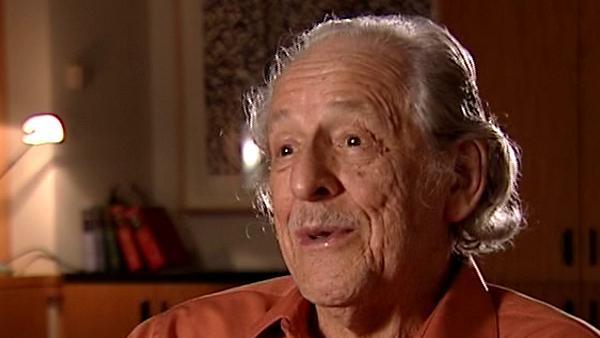

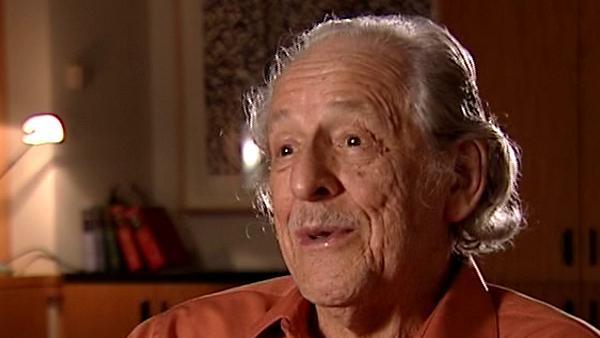

Eugene Garfield (1925-2017) was an American scientist and publisher. In 1960 Garfield set up the Institute for Scientific Information which produced, among many other things, the Science Citation Index and fulfilled his dream of a multidisciplinary citation index. The impact of this is incalculable: without Garfield’s pioneering work, the field of scientometrics would have a very different landscape, and the study of scholarly communication would be considerably poorer.

Title: The impact of scientific information systems on scientific progress

Listeners: Henry Small

Henry Small is currently serving part-time as a research scientist at Thomson Reuters. He was formerly the director of research services and chief scientist. He received a joint PhD in chemistry and the history of science from the University of Wisconsin. He began his career as a historian of science at the American Institute of Physics' Center for History and Philosophy of Physics where he served as interim director until joining ISI (now Thomson Reuters) in 1972. He has published over 100 papers and book chapters on topics in citation analysis and the mapping of science. Dr Small is a Fellow of the American Association for the Advancement of Science, an Honorary Fellow of the National Federation of Abstracting and Information Services, and past president of the International Society for Scientometrics and Informetrics. His current research interests include the use of co-citation contexts to understand the nature of inter-disciplinary versus intra-disciplinary science as revealed by science mapping.

Duration: 5 minutes, 2 seconds

Date story recorded: September 2007

Date story went live: 23 June 2009