RELATED STORIES

| | | Views | Duration |
|---|---|---|---|
| 181. AI and why I built the Connection Machine | | 100 | 03:24 |
| 182. The complexity of human intelligence | | 103 | 05:03 |
| 183. Recreating evolution inside a computer | 1 | 80 | 03:38 |
| 184. Nature – the great engineer | 1 | 91 | 02:44 |
| 185. Morphogenesis as an adaptive process | | 76 | 03:01 |
| 186. The two-dimensional landscape of evolution | | 75 | 03:51 |
| 187. Evolving an intelligence with the use of computers | | 70 | 00:59 |
| 188. Programming an intelligence for solving complex problems | | 68 | 03:36 |
| 189. How to create an intelligence | | 69 | 05:11 |
| 190. My interest in proteomics | | 83 | 02:50 |

There have always been kind of two schools of thought in artificial intelligence. One of them is kind of the emergent intelligence idea: for instance, neural networks. The idea that you'd have some system that was a fundamentally simple system of replicated parts that had in it the inherent ability to organise. And that by some method of interaction with the world or training or something like that, that would form into something that could do intelligence. So neural networks are the perfect example of that. They have some structure, they have layers, they have not complete connectivity, but you're able to train them, for instance, on pictures and have them determine different categories of things.

And so that idea was a very early idea in artificial intelligence. And in fact, Marvin [Minsky] worked first on that idea. And he built little electronic neurons out of tubes, and that's what he did when he was a Harvard fellow. The problem with that idea was that it really needed a lot of neurons, and so you had to get up to very, very large numbers before you got that kind of emergence happening. And so while in principle people had very good ideas about how to train the networks and so on, going back to McCulloch and Pitts, and people like Jerry Lettvin and Marvin Ashby, who, you know, definitely had notions of how to do this, in practice they couldn't do it very much.

So part of why I built the Connection Machine was to try to get the number of neurons up much higher so that you could do those kinds of things. Now, as it turns out, even though I increased it maybe by a factor of 10,000 over what you could do before, I was still another factor of a thousand or a million off of what was actually required. So in fact, recently people have built big enough machines so that they've actually gotten enough to get this emergent behaviour. They've got enough data to train it on. And in fact, it works very well, pretty much with exactly the algorithms that people imagined in the 1950s and 1960s. It just required more neurons.

So that certainly works, and something like that is certainly a part of intelligence. So that's the sort of... I would say, the kind of mysterious subconscious intelligence of... certainly recognition seems to work like that. And that's the kind of intelligence that takes lots and lots of weak information and puts it together and somehow comes to a conclusion, like I'm looking at George Dyson right now, from lots of little tiny clues.

So that kind of intelligence is very easy for us. We do it without much effort, but it's probably actually most of the computation that goes on in our minds. And it's probably the kind of intelligence that we have in common with animals.

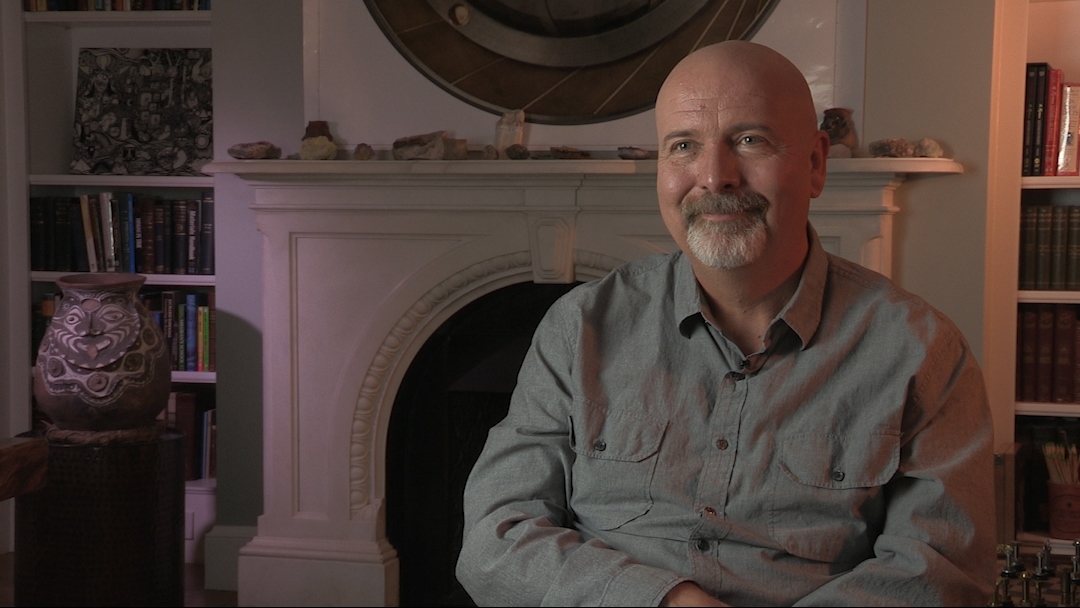

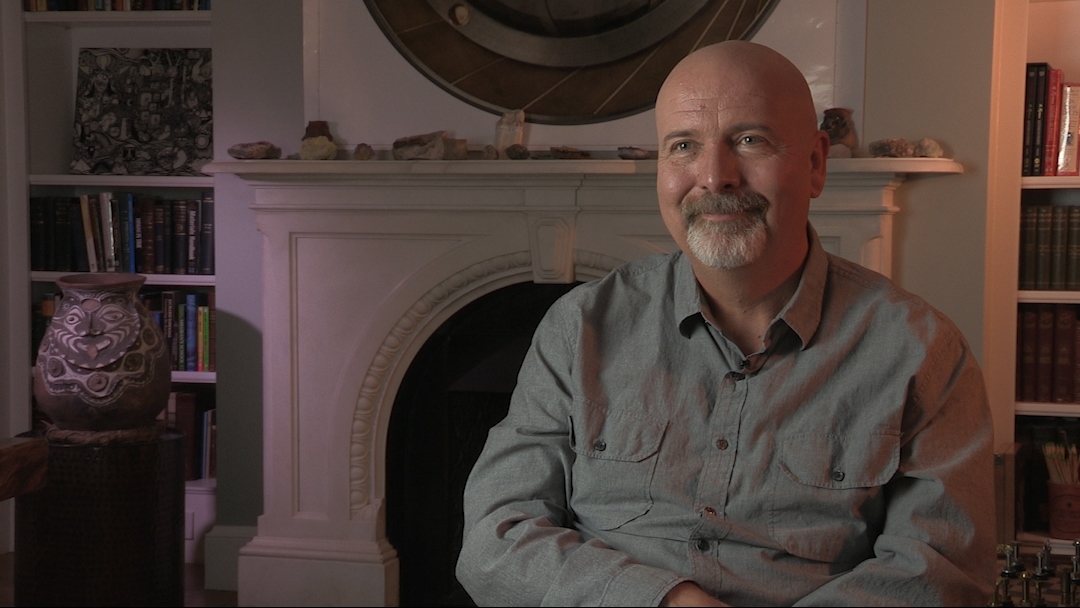

W Daniel Hillis (b. 1956) is an American inventor, scientist, author and engineer. While doing his doctoral work at MIT under artificial intelligence pioneer Marvin Minsky, he invented the concept of parallel computers, which is now the basis for most supercomputers. He also co-founded the famous parallel computing company Thinking Machines in 1983, which marked a new era in computing. In 1996, Hillis left MIT for California, where he spent time leading Disney's Imagineers. He developed new technologies and business strategies for Disney's theme parks, television, motion pictures, Internet and consumer product businesses. More recently, Hillis co-founded an engineering and design company, Applied Minds, and several start-ups, among them Applied Proteomics in San Diego, MetaWeb Technologies (acquired by Google) in San Francisco, and his current passion, Applied Invention in Cambridge, MA, which 'partners with clients to create innovative products and services'. He holds over 100 US patents, covering parallel computers, disk arrays, forgery prevention methods, and various electronic and mechanical devices (including a 10,000-year mechanical clock), and has recently moved into working on problems in medicine. In recognition of his work, Hillis has won many awards, including the Dan David Prize.

Title: AI and why I built the Connection Machine

Listeners: Christopher Sykes, George Dyson

Christopher Sykes is an independent documentary producer who has made a number of films about science and scientists for BBC TV, Channel Four, and PBS.

Tags: Marvin Minsky

Duration: 3 minutes, 24 seconds

Date story recorded: October 2016

Date story went live: 05 July 2017