NEXT STORY

Programming is not what it used to be

RELATED STORIES

| Story | Views | Duration |
|---|---|---|
| 211. Getting to steer a nuclear submarine | 67 | 02:22 |
| 212. Connection Machine and MapReduce paradigm | 72 | 03:00 |
| 213. Programming is not what it used to be | 93 | 04:32 |
| 214. Biology and technology become increasingly alike | 67 | 03:10 |
| 215. Age of Enlightenment versus Age of Entanglement | 110 | 01:12 |
| 216. The Entanglement needs a different paradigm | 92 | 03:06 |
| 217. My life list: Climbing the Great Pyramid | 71 | 05:18 |
| 218. My life list: Flying a helicopter | 60 | 02:08 |
| 219. My life list: Northern Lights | 72 | 01:50 |
| 220. My life list: Ise Shrine | 62 | 03:25 |

When we first started programming the Connection Machine, we figured out what was actually wrong with Amdahl's Law. Amdahl's Law always assumed that you broke up the program so that different parts of the program ran on different parts of the computer. And of course there are only so many ways to break up a task, and you can't break it up evenly. But the more interesting way to program the Connection Machine was to break up the data and do the same thing to all of the data. So we worked out this programming paradigm, which we called Data Parallel Programming, to contrast it with Control Parallel Programming, which is breaking up the program. And basically it's what's now called MapReduce.

The basic idea is that you have lots of pieces of data in lots of different processors. The first operation you do is a Map operation: you apply the same function to every piece of data. It might be, say, a filter function. Let's say I'm trying to count the number of things that are greater than five. So the first step is to map the test "is it greater than five?" across all the pieces of data. Now I want to count them, and that's a reduction: somehow you bring all that information together into one number. I might add them up, I might count them, I might average them.

Because we knew we wanted to program it that way, I designed the Connection Machine so that it had special hardware for doing those two functions. It had special hardware for mapping a program across everything, so you could broadcast the same program out to every processor. And then it had a special network for reducing. So MapReduce was built into the hardware of the Connection Machine; it was basically a MapReduce machine. In my thesis, I called map and reduce Alpha and Beta. Basically, you could reduce over any associative function, like add or average, and you could map any function: you just repeat it on each of the data elements.

That paradigm for programming, MapReduce, actually got popularised probably through Sergey Brin, who was a Connection Machine programmer. Google used it on their machines; even when they went on and didn't use Connection Machines, they still used that MapReduce paradigm. Then some people that spun out of Google created this thing called Hadoop, and so now that's basically the way an awful lot of parallel programming is done. But it's all derived from the way the hardware of the Connection Machine actually worked.
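The map-then-reduce pattern described above can be sketched in a few lines of Python. This is a minimal sequential simulation under stated assumptions, not actual Connection Machine code and not the Google MapReduce or Hadoop API; the function names `data_parallel_map`, `tree_reduce`, `count_greater_than` and `average` are hypothetical. The map step applies one function to every element. The reduce step combines results pairwise in rounds, an assumed stand-in for a hardware reduction network, which is why the combining operation must be associative. Because averaging partial averages is not itself associative, the sketch computes an average by reducing (sum, count) pairs and dividing once at the end.

```python
from operator import add
from typing import Callable, List, Sequence, Tuple, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def data_parallel_map(f: Callable[[T], U], data: Sequence[T]) -> List[U]:
    """Map step (what the thesis called Alpha): apply one function to every element.

    On the Connection Machine each element would sit in its own processor and
    the function would be broadcast to all of them; here it is a plain loop.
    """
    return [f(x) for x in data]


def tree_reduce(op: Callable[[U, U], U], values: Sequence[U]) -> U:
    """Reduce step (what the thesis called Beta): combine values pairwise in rounds.

    Each round roughly halves the number of values, so n values need about
    log2(n) rounds. The grouping differs from a left-to-right fold, so the
    result is only well defined when `op` is associative.
    """
    if not values:
        raise ValueError("cannot reduce an empty sequence")
    level = list(values)
    while len(level) > 1:
        paired = [op(level[i], level[i + 1]) for i in range(0, len(level) - 1, 2)]
        if len(level) % 2 == 1:
            paired.append(level[-1])  # an odd element passes through to the next round
        level = paired
    return level[0]


def count_greater_than(data: Sequence[int], threshold: int) -> int:
    # Map a 0/1 test across the data, then reduce the flags with addition.
    flags = data_parallel_map(lambda x: 1 if x > threshold else 0, data)
    return tree_reduce(add, flags)


def add_pairs(a: Tuple[int, int], b: Tuple[int, int]) -> Tuple[int, int]:
    # Component-wise addition of (sum, count) pairs; this operation is associative.
    return (a[0] + b[0], a[1] + b[1])


def average(data: Sequence[int]) -> float:
    # Reduce (sum, count) pairs rather than averaging partial averages.
    total, n = tree_reduce(add_pairs, data_parallel_map(lambda x: (x, 1), data))
    return total / n


if __name__ == "__main__":
    readings = [3, 8, 1, 9, 6, 2, 7, 4]
    print(count_greater_than(readings, 5))  # 4
    print(average(readings))                # 5.0
    print(tree_reduce(max, readings))       # 9
```

With `readings = [3, 8, 1, 9, 6, 2, 7, 4]`, the filter-and-count example gives 4 (the values 8, 9, 6 and 7), and the same `tree_reduce` works unchanged for `max`. Passing a non-associative operation such as subtraction could make the tree result differ from a left-to-right fold, which is the reason the reduction is restricted to associative functions.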

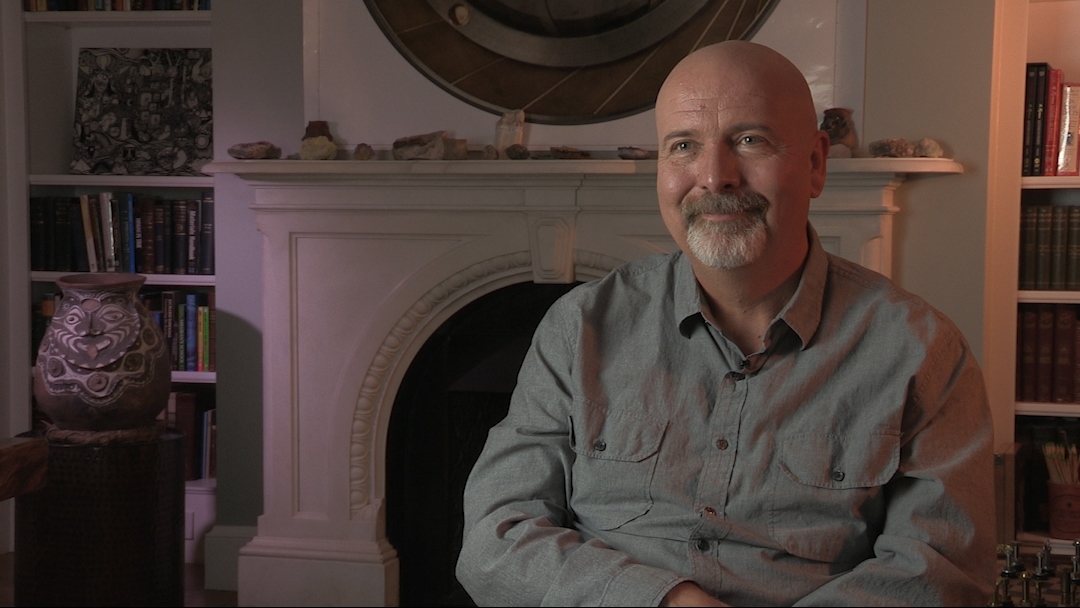

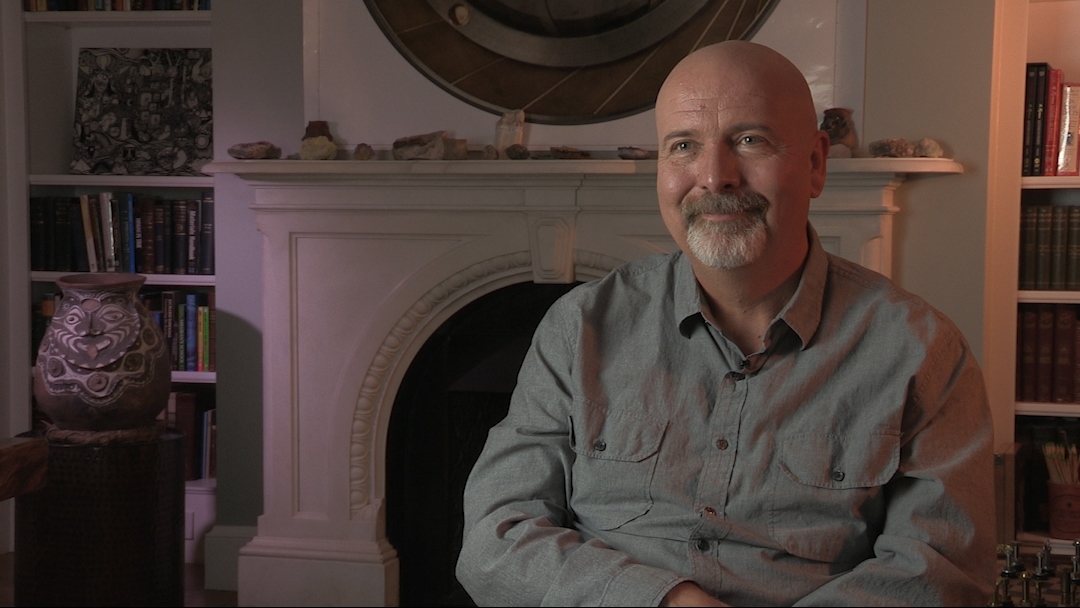

W Daniel Hillis (b. 1956) is an American inventor, scientist, author and engineer. While doing his doctoral work at MIT under artificial intelligence pioneer Marvin Minsky, he conceived the Connection Machine, a massively parallel computer, and that massively parallel approach is now the basis for most supercomputers. He also co-founded the famous parallel computing company Thinking Machines in 1983, which marked a new era in computing. In 1996, Hillis left MIT for California, where he spent time leading Disney’s Imagineers. He developed new technologies and business strategies for Disney's theme parks, television, motion pictures, Internet and consumer product businesses. More recently, Hillis co-founded an engineering and design company, Applied Minds, and several start-ups, among them Applied Proteomics in San Diego, Metaweb Technologies (acquired by Google) in San Francisco, and his current passion, Applied Invention in Cambridge, MA, which 'partners with clients to create innovative products and services'. He holds over 100 US patents, covering parallel computers, disk arrays, forgery prevention methods, and various electronic and mechanical devices (including a 10,000-year mechanical clock), and has recently moved into working on problems in medicine. In recognition of his work, Hillis has won many awards, including the Dan David Prize.

Title: Connection Machine and MapReduce paradigm

Listeners: Christopher Sykes, George Dyson

Christopher Sykes is an independent documentary producer who has made a number of films about science and scientists for BBC TV, Channel Four, and PBS.

Tags: Sergey Brin

Duration: 3 minutes

Date story recorded: October 2016

Date story went live: 05 July 2017