NEXT STORY

How I became interested in neural networks

RELATED STORIES


| Story | | Views | Duration |
|---|---|---|---|
| 21. A short history of neural networks | | 1579 | 02:30 |
| 22. How I became interested in neural networks | 1 | 1322 | 01:53 |
| 23. My early methods for testing neural networks | | 1129 | 02:10 |
| 24. My PhD thesis on learning machines | | 1273 | 01:06 |
| 25. John Nash solves my PhD problem | | 1945 | 01:48 |
| 26. Why I changed from bottom-up to top-down thinking | 2 | 2650 | 02:39 |
| 27. The end of my PhD on learning machines | | 1271 | 04:24 |
| 28. My first encounter with a computer | 1 | 1088 | 01:56 |
| 29. Writing a program for Russell Kirsch's SEAC | | 970 | 01:54 |
| 30. The first timeshared computer | | 938 | 01:03 |

There had been some theories of what neural networks could do, and the answer was that neural networks are like logic, in the sense that you can make little boxes that compute simple properties of input signals and represent them in their output in some way. For example, one neuron will recognize whether two inputs come at approximately the same time and say "yes, both", and that corresponds to saying that two statements are true at the same time, something like that.

So there's a rule, "and". Another kind of neuron will produce an output if either input is excited, and that's called an "or". And then there's a "not", where you have a neuron that produces output unless a signal comes in, which then turns it off. And it turns out that with those three kinds of things you can build any kind of more complicated machine you can think of. That was known rather early, by Russell and Whitehead and people like that, around the beginning of the 20th century.
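The three kinds of neuron described here can be illustrated with simple threshold units in the McCulloch-Pitts style. The following is a minimal sketch, assuming binary (0/1) inputs and a unit that fires when the weighted sum of its inputs reaches a threshold; the function names, weights and thresholds are illustrative choices, not anything specified in the talk. The `xor_network` function shows the idea of combining the three primitives into a more complicated machine.

```python
from typing import Sequence


def threshold_unit(inputs: Sequence[int], weights: Sequence[float], threshold: float) -> int:
    """Fire (return 1) when the weighted sum of binary inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def and_neuron(a: int, b: int) -> int:
    # Fires only when both inputs arrive together (a coincidence detector).
    return threshold_unit([a, b], [1, 1], 2)


def or_neuron(a: int, b: int) -> int:
    # Fires when either input is excited.
    return threshold_unit([a, b], [1, 1], 1)


def not_neuron(a: int) -> int:
    # Fires by default; an incoming signal on the inhibitory (-1) weight switches it off.
    return threshold_unit([a], [-1], 0)


def xor_network(a: int, b: int) -> int:
    # Illustrative composite "machine": fires when exactly one input is on,
    # built only from the three primitive neurons above.
    return and_neuron(or_neuron(a, b), not_neuron(and_neuron(a, b)))


if __name__ == "__main__":
    # Print the truth table: a, b, AND, OR, XOR.
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, and_neuron(a, b), or_neuron(a, b), xor_network(a, b))
```

Exclusive-or is chosen here only because no single unit of this kind can compute it, so it needs a small network of the primitives.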

So when I was going to school in the 1950s, a lot had been known about logic and simple machines for 50 years, but in the 1930s and '50s a lot more was learned about machines: by Alan Turing, who invented the modern theory of computers (1936 was his outstanding paper), and by Claude Shannon, who invented a great theory of the amount of information carried by signals, published I think just about 1950; he had also invented logical theories before that. And Shannon became a close friend of ours fairly early, in 1952 in fact.

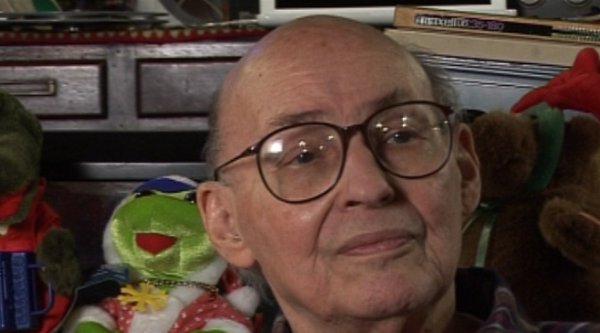

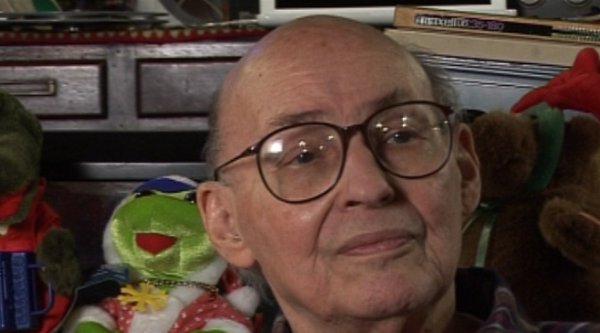

Marvin Minsky (1927-2016) was one of the pioneers of the field of Artificial Intelligence, founding the MIT AI lab in 1970. He also made many contributions to the fields of mathematics, cognitive psychology, robotics, optics and computational linguistics. Since the 1950s he had been attempting to define and explain human cognition, and his ideas on the subject can be found in his two books, The Emotion Machine and The Society of Mind. His many inventions include the first confocal scanning microscope, the first neural network simulator (SNARC) and the first LOGO 'turtle'.

Title: A short history of neural networks

Listeners: Christopher Sykes

Christopher Sykes is a London-based television producer and director who has made a number of documentary films for BBC TV, Channel 4 and PBS.

Tags: 1950s, 1930s, 1936, 1952, Bertrand Russell, Alfred North Whitehead, Claude Shannon, Alan Turing

Duration: 2 minutes, 31 seconds

Date story recorded: 29-31 Jan 2011

Date story went live: 09 May 2011