NEXT STORY

The end of my PhD on learning machines

RELATED STORIES

| Story | | Views | Duration |
|---|---|---|---|
| 21. A short history of neural networks | | 1581 | 02:30 |
| 22. How I became interested in neural networks | 1 | 1323 | 01:53 |
| 23. My early methods for testing neural networks | | 1130 | 02:10 |
| 24. My PhD thesis on learning machines | | 1273 | 01:06 |
| 25. John Nash solves my PhD problem | | 1946 | 01:48 |
| 26. Why I changed from bottom-up to top-down thinking | 2 | 2650 | 02:39 |
| 27. The end of my PhD on learning machines | | 1271 | 04:24 |
| 28. My first encounter with a computer | 1 | 1089 | 01:56 |
| 29. Writing a program for Russell Kirsch's SEAC | | 970 | 01:54 |
| 30. The first timeshared computer | | 938 | 01:03 |

I was interested in that... trying to design a machine that would collect a lot of experience and then make predictions about what was likely to happen next, and so the... this thesis ends up with that, a little bit of theory about that... but then I decided that that was really the wrong way... or a wrong way to think about thinking. And... I think in 1956, or was it 54? I’ll have to look it up again... another friend of mine named Ray Solomonoff started to produce a theory of how to predict... make predictions from experience based on a completely different set of ideas based on probability theory and, more important, based on Alan Turing’s idea about universal machines; and Solomonoff’s idea was that basically the best way to make a prediction is to find the simplest Turing machine that will produce a certain phenomenon.

Now, there’s no systematic way known where... and in fact it may be impossible to find the simplest Turing machine that produces a certain result, but still this is a wonderfully important idea about the foundations of probability and about how one could build very intelligent machines. So after I got this insight from Solomonoff, I stopped working on neural nets entirely because I felt that was a bottom-up approach, and one really couldn’t design a machine to do intelligent things unless one had some sort of top-down theory of what it is that… what kind of behaviour that machine should have.

Once you have a clear idea of what it is to be a smart machine, then you can start to figure out, well then, what kind of machinery could support that, and what kind of machinery would it take to do that? And then at the end of a rather long path we could ask, and how could we use neurons or things like we find... like the cells we find in the brain, to produce these functions?

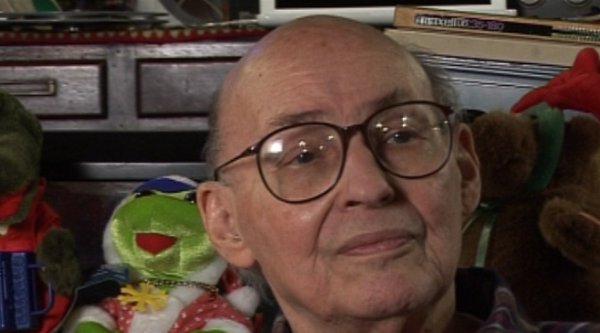

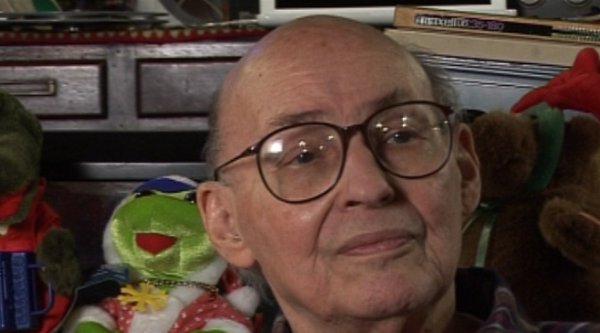

Marvin Minsky (1927-2016) was one of the pioneers of the field of Artificial Intelligence, founding the MIT AI lab in 1970. He also made many contributions to the fields of mathematics, cognitive psychology, robotics, optics and computational linguistics. Since the 1950s, he had been attempting to define and explain human cognition, and his ideas on it can be found in his two books, *The Emotion Machine* and *The Society of Mind*. His many inventions include the first confocal scanning microscope, the first neural network simulator (SNARC) and the first LOGO 'turtle'.

Title: Why I changed from bottom-up to top-down thinking

Listeners: Christopher Sykes

Christopher Sykes is a London-based television producer and director who has made a number of documentary films for BBC TV, Channel 4 and PBS.

Tags: Ray Solomonoff, Alan Turing

Duration: 2 minutes, 40 seconds

Date story recorded: 29-31 Jan 2011

Date story went live: 09 May 2011