
I don’t remember clearly when I got interested in psychology, but I’m sure it was related to accidentally discovering a book called Mathematical Biophysics by Nicolas Rashevsky, who had published a journal for many years. One of the important papers in his journal was the paper by McCulloch and Pitts on how logical modules, little devices that computed ands and ors and nots, could compute more complicated functions of stimuli and produce responses and so forth.

So that was a general theory, and general theories are often easier to construct than specific ones: it said how you could make any machine, but it didn’t give details. I got more interested in how I could make a particular machine that would learn from experience and do some of the things that intelligent animals can do. So I worked out some ideas about how one kind of neural network could generate different possibilities. The simplest idea would be to try things at random, but that’s rather uninteresting in some ways, and it’s much harder to experiment with. Another alternative is to generate different possibilities systematically. That could be more efficient, because you don’t waste time duplicating things. On the other hand, it might be inefficient, because it explores too many meaningless possibilities.

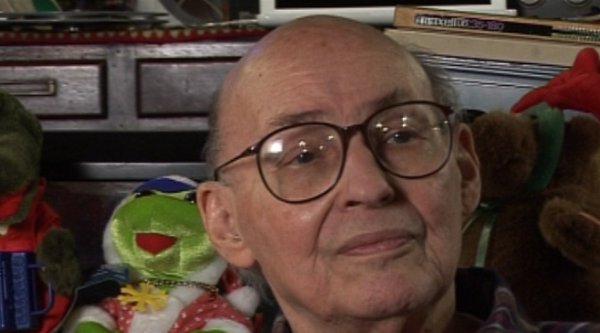

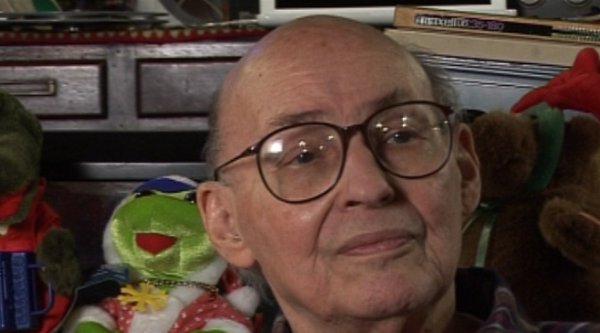

Marvin Minsky (1927-2016) was one of the pioneers of the field of Artificial Intelligence, founding the MIT AI lab in 1970. He also made many contributions to the fields of mathematics, cognitive psychology, robotics, optics and computational linguistics. From the 1950s onward, he worked to define and explain human cognition, and his ideas on it can be found in his two books, The Emotion Machine and The Society of Mind. His many inventions include the first confocal scanning microscope, the first neural network simulator (SNARC) and the first LOGO 'turtle'.

Title: My early methods for testing neural networks

Listeners: Christopher Sykes

Christopher Sykes is a London-based television producer and director who has made a number of documentary films for BBC TV, Channel 4 and PBS.

Tags: Mathematical Biophysics, Nicolas Rashevsky, Warren McCulloch, Walter Pitts

Duration: 2 minutes, 11 seconds

Date story recorded: 29-31 Jan 2011

Date story went live: 09 May 2011