NEXT STORY

Learning machine theories after SNARC

RELATED STORIES

| Story | | Views | Duration |
|---|---|---|---|
| 131. The work of BF Skinner | | 1219 | 02:22 |
| 132. My friendship with BF Skinner | | 1161 | 02:58 |
| 133. The cleverest rat | 5 | 1080 | 03:10 |
| 134. Activating a dead crayfish claw | | 982 | 04:20 |
| 135. My endless sequence of lucky breaks | | 1015 | 00:40 |
| 136. Building my randomly wired neural network machine | | 1861 | 02:25 |
| 137. Show and tell: My neural network machine | 1 | 1827 | 03:21 |
| 138. Learning machine theories after SNARC | | 1143 | 02:09 |
| 139. My mistake when inventing the confocal microscope | | 1252 | 01:11 |
| 140. Nicholas Negroponte's lab: The Architecture Machine | | 1039 | 02:00 |

We designed this thing and there were... the machine was a rack of 40 of these. So it was about as big as a grand piano and full of racks of equipment.

And here is a machine that has a memory, which is the probability that if a signal comes into one of these inputs, another signal will come out the output. And the probability that will happen goes from zero – if this volume control is turned down – to one if it’s turned all the way up. And then, there’s a certain probability that the signal will get through. If the signal gets through, this capacitor remembers that for a few seconds. So that’s... that’s the short term memory of the neuron.

And then, if you reward the thing for what it’s done... there are 40 neurons, maybe 20 of them conducted impulses and something happened which you liked, typically. Then, you would press a button to reward the... this animal, which is the size of a grand piano. And a big motor starts and there... there’s a chain that goes to all 40 of these potentiometers. And... but in between that, there’s a magnetic clutch. So, if this conduct... if this neuron actually transmitted an impulse and this capacitor remembers it, then this clutch will be engaged. And when the big chain moves through all of these things, then the ones that have recently fired will... will move a little bit.

And the amount that it moves will depend on how long ago it fired because the charge on this capacitor is time dependent and... once you’ve charged the capacitor, the current goes through this resistor and... and drains out. So, this is a little short term memory of what recently happened and this is the long term memory.

So, I could make a simulated rat out of 40 of these. It had a big plug board and you just wire them all in random... this thing connected to that and so forth. And it could learn. It could learn and we built a maze which was a copy of a maze that Shannon had built.
Shannon built a machine that learnt to run through a maze not using a nervous system, but using relays. And I sort of copied that and this one also could learn slowly. But it... it learnt some things and it couldn’t learn some other things. And no sooner was the machine finished and we ran some experiments with it than I concluded that this theory wasn’t good enough.
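
The mechanism described in the transcript above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a model of the actual hardware: the potentiometer is represented as a transmission probability, the capacitor as a trace that decays exponentially (as an RC discharge does), and the clutch-and-chain reward as a nudge to the probability scaled by the remaining trace. The names `Neuron`, `TAU`, `REWARD_STEP` and `demo`, and the numeric values, are hypothetical and do not come from the source.

```python
import math
import random

TAU = 3.0          # assumed capacitor time constant in seconds ("a few seconds")
REWARD_STEP = 0.1  # assumed largest change in probability per reward


class Neuron:
    def __init__(self, prob=0.5):
        self.prob = prob   # potentiometer: 0.0 (turned down) to 1.0 (all the way up)
        self.trace = 0.0   # capacitor charge: 1.0 right after firing, draining to 0.0

    def receive(self, rng):
        """Pass an incoming signal with probability `prob`; charge the capacitor if it passes."""
        fired = rng.random() < self.prob
        if fired:
            self.trace = 1.0
        return fired

    def decay(self, dt):
        """Let the capacitor drain through its resistor for `dt` seconds."""
        self.trace *= math.exp(-dt / TAU)

    def reward(self):
        """Turn the potentiometer up in proportion to the charge that is left."""
        self.prob = min(1.0, self.prob + REWARD_STEP * self.trace)


def demo(seed=0):
    """Send one signal to 40 neurons, wait one second, then reward all of them."""
    rng = random.Random(seed)
    neurons = [Neuron() for _ in range(40)]
    fired = [n.receive(rng) for n in neurons]
    for n in neurons:
        n.decay(1.0)
    for n in neurons:
        n.reward()
    return neurons, fired
```

Calling `demo()` returns the 40 neurons and a list of which ones fired. Only the neurons that fired end up with a higher `prob`, and the longer the delay passed to `decay` before the reward, the smaller the increase.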

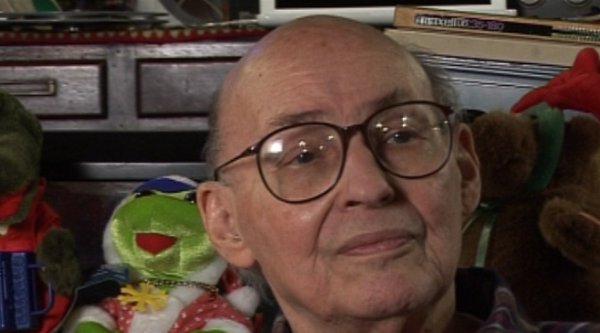

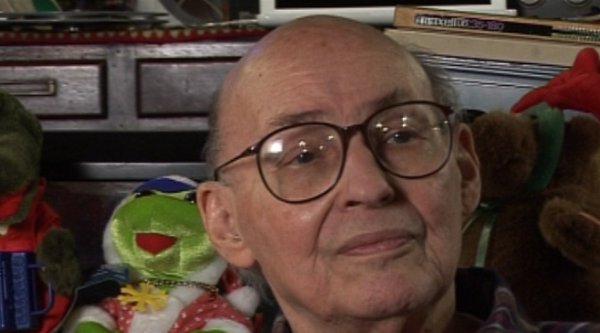

Marvin Minsky (1927-2016) was one of the pioneers of the field of Artificial Intelligence, founding the MIT AI lab in 1970. He also made many contributions to the fields of mathematics, cognitive psychology, robotics, optics and computational linguistics. From the 1950s onwards, he attempted to define and explain human cognition, and his ideas on the subject can be found in his two books, The Emotion Machine and The Society of Mind. His many inventions include the first confocal scanning microscope, the first neural network simulator (SNARC) and the first LOGO 'turtle'.

Title: Show and tell: My neural network machine

Listeners: Christopher Sykes

Christopher Sykes is a London-based television producer and director who has made a number of documentary films for BBC TV, Channel 4 and PBS.

Tags: Claude Shannon

Duration: 3 minutes, 22 seconds

Date story recorded: 29-31 Jan 2011

Date story went live: 13 May 2011