RELATED STORIES

| Story | Views | Duration |
|---|---|---|
| 51. Amotz Zahavi's handicap principle | 1123 | 02:56 |
| 52. Struggling with Amotz Zahavi's verbal model of 'stotting' | 1154 | 03:23 |
| 53. How Alan Grafen proves Zahavi's Handicap principle in sexual... | 1204 | 04:18 |
| 54. My explanation of the Sir Philip Sidney game | 792 | 03:42 |
| 55. An example of a cost-free signal in Drosophila... | 671 | 01:22 |
| 56. Is semantics in science important enough to argue about? | 778 | 01:24 |
| 57. Arguing over the use of words in science | 716 | 02:28 |
| 58. Can sociology and biology mix? | 1096 | 03:46 |
| 59. The extreme reaction of EO Wilson's colleagues | 1246 | 00:54 |
| 60. The Sociobiology controversy: The Marxist reaction | 1072 | 01:03 |

I came up with something called the Philip Sidney Game, which I'm rather proud of. The... the Philip Sidney game is all about Sir Philip Sidney, who, you'll remember is an English poet on a battlefield in Holland, and he's wounded and is lying wounded on the battlefield, and lying next door to him is a wounded soldier. And the story has it that he handed his water bottle to the soldier, saying, 'Thy necessity is yet greater than mine.' Now, this was after this... actually, I think they both died, so I can't see that it did them much good, but that's not in my model.

My model is this, that Sir Philip Sidney can either give the water bottle to the soldier or not give it. Now, the soldier can either be really in a bad way, so that if he doesn't get the water bottle, he'll die, or he can be sort of thirsty, he'd like the water bottle, but not... it's not real serious, he'd got a decent chance of surviving if he doesn't get it. The question is, can there be an honest signalling between the soldier and Sir Philip Sidney, such that if the soldier is really in a bad way, he can make a signal saying, 'Look, I'm dying, give me the water bottle,' but he doesn't give that signal if he's not really in a bad way.

And at first sight, you'd say, well, look, this whole thing is absurd because there's no way, in an evolutionary context, Sir Philip Sidney is going to give the water bottle, how is his fitness going to be increased by giving the water bottle? In a Darwinian context, there's got to be something in it for the... for the donor. And what I haven't... this is really borrowing an idea from Bill Hamilton, you see, what I didn't tell you was that the soldier is actually Sir Philip Sidney's brother, and Sir Philip Sidney has a stake in the survival of the soldier. And you can set this up all really quite simply, and it turns out to be, you know, quite simple to do the algebra.

And it also turns out that in order... there are some contexts in which it turns out that before the signalling can be honest, so that only really soldiers in a really bad way make the signal, in order for that to be true, it has to be true that making the signal is expensive. It's costly, exactly in Zahavi's sense. So, you can make a model, which is... it's a much simplified version of what Alan [Grafen] is saying, and it works. However... and that was really not adding anything except providing a very, very simple model which didn't involve you having to be able to do hard calculus in order to be able to understand it.

But something sort of fell out of the model which is sort of obvious, but I hadn't actually thought about it until I did the model; which is that there's a whole range of circumstances in which actually cost-free signals can be... can be reliable. It isn't always true that signals have to be expensive in order to be honest. And, in fact, there are lots of contexts in which there isn't a conflict of interest between the soldier and... and the... and Sir Philip Sidney, that in those contexts when it would pay Sir Philip Sidney to give the water bottle, it would pay the soldier to signal. If it doesn't pay the soldier to signal, it doesn't pay Sir Philip Sidney to give it. There's no conflict of interest between signaller and the receiver.
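Editorial illustration: the game described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the speaker's own formulation. The talk gives no numbers, so every name and value below (`SidneyGame`, `r`, `a`, `b`, `d`, `c`, and the example figures) is hypothetical. It also assumes that inclusive fitness is own survival plus relatedness times the other party's survival, and that the cost of signalling is simply subtracted from the soldier's survival.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SidneyGame:
    """Hypothetical parameters; none of these values come from the talk."""
    r: float        # relatedness of donor and soldier (0.5 for full brothers)
    a: float        # survival lost by a soldier in a bad way if he gets no water
    b: float        # survival lost by a merely thirsty soldier if he gets no water
    d: float        # survival lost by the donor if he gives the bottle away
    c: float = 0.0  # survival lost by the soldier if he makes the signal


def soldier_survival(g, needy, signals, gets_water):
    # Assumption: water restores full survival; the signal cost is additive.
    loss = 0.0 if gets_water else (g.a if needy else g.b)
    return 1.0 - loss - (g.c if signals else 0.0)


def donor_survival(g, gives):
    return 1.0 - g.d if gives else 1.0


def soldier_fitness(g, needy, signals):
    # Inclusive fitness of the soldier when the donor gives only on a signal.
    gives = signals
    return soldier_survival(g, needy, signals, gives) + g.r * donor_survival(g, gives)


def donor_fitness(g, needy, signals, gives):
    # Inclusive fitness of the donor for a given soldier state and response.
    return donor_survival(g, gives) + g.r * soldier_survival(g, needy, signals, gives)


def honest_signalling_is_stable(g):
    """True if nobody gains by deviating from: the soldier in a bad way
    signals, the thirsty soldier stays silent, the donor gives only on a signal."""
    return all([
        soldier_fitness(g, True, True) >= soldier_fitness(g, True, False),
        soldier_fitness(g, False, False) >= soldier_fitness(g, False, True),
        donor_fitness(g, True, True, True) >= donor_fitness(g, True, True, False),
        donor_fitness(g, False, False, False) >= donor_fitness(g, False, False, True),
    ])


# Conflict of interest: the thirsty soldier would also like the bottle.
conflict_free_signal = SidneyGame(r=0.5, a=0.6, b=0.4, d=0.25, c=0.0)
conflict_costly_signal = SidneyGame(r=0.5, a=0.6, b=0.4, d=0.25, c=0.3)
# No conflict: the thirsty soldier does better leaving the bottle with his brother.
no_conflict_free_signal = SidneyGame(r=0.5, a=0.9, b=0.1, d=0.4, c=0.0)

for name, game in [
    ("conflict, free signal", conflict_free_signal),
    ("conflict, costly signal", conflict_costly_signal),
    ("no conflict, free signal", no_conflict_free_signal),
]:
    print(name, honest_signalling_is_stable(game))
```

With these illustrative numbers, honest signalling breaks down in the first case because the thirsty soldier gains by signalling too, it holds once the signal is costly enough, and it holds with a cost-free signal when the thirsty soldier has no incentive to ask. That mirrors the two points made in the talk, but the thresholds depend entirely on the assumed payoffs.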

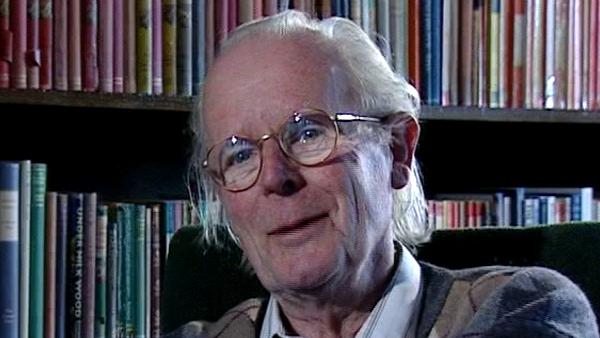

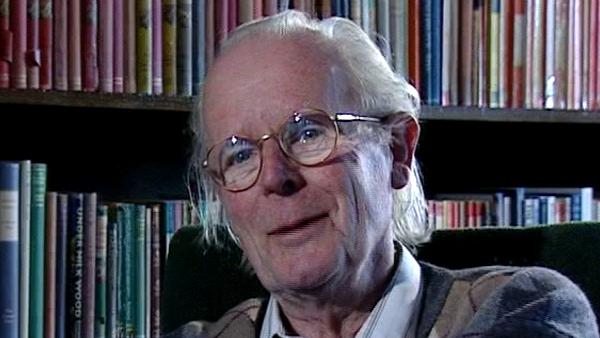

The late British biologist John Maynard Smith (1920-2004) is famous for applying game theory to the study of natural selection. At Eton College, inspired by the work of old Etonian JBS Haldane, Maynard Smith developed an interest in Darwinian evolutionary theory and mathematics. Then he entered University College London (UCL) to study fruit fly genetics under Haldane. In 1973 Maynard Smith formalised a central concept in game theory called the evolutionarily stable strategy (ESS). His ideas, presented in books such as 'Evolution and the Theory of Games', were enormously influential and led to a more rigorous scientific analysis and understanding of interactions between living things.

Title: My explanation of the Sir Philip Sidney game

Listeners: Richard Dawkins

Richard Dawkins was educated at Oxford University and has taught zoology at the universities of California and Oxford. He is a fellow of New College, Oxford and the Charles Simonyi Professor of the Public Understanding of Science at Oxford University. Dawkins is one of the leading thinkers in modern evolutionary biology. He is also one of the best read and most popular writers on the subject: his books about evolution and science include "The Selfish Gene", "The Extended Phenotype", "The Blind Watchmaker", "River Out of Eden", "Climbing Mount Improbable", and most recently, "Unweaving the Rainbow".

Tags: Sir Philip Sidney, WD Hamilton, Amotz Zahavi

Duration: 3 minutes, 42 seconds

Date story recorded: April 1997

Date story went live: 24 January 2008