
But one thing that they couldn’t learn was to count the number of objects in a picture. It just turned out that, because of rather obscure mathematical features of the concept of counting, a reinforcement neural network of the type that many people were interested in just couldn’t do that. In order to make a machine that can count, you need a machine that has internal loops, a sort of circular causality, and you can’t make a machine that just passes signals from one layer to another, processing them in a kind of linear form. Well, it took us several years to prove that for certain neural networks and, although we published all of this around 1970, there are still some more things to prove about it. And for some reason, nobody has developed... This was a field called perceptrons. And some rumor started to spread that by reinforcing the machine in a slightly different way, it could overcome these limitations. So, the whole world of neural network people seems to believe that this problem has been escaped and that we were wrong in saying that there was no way these machines could learn that. And because of this false rumor, there hasn’t been any further progress in understanding the limitations of neural networks with many layers, but without loops. So, one reason I’m annoyed at my colleagues in this particular field of psychological theories is that they believe these rumors without actually looking at the original problem and seeing that it’s still there.

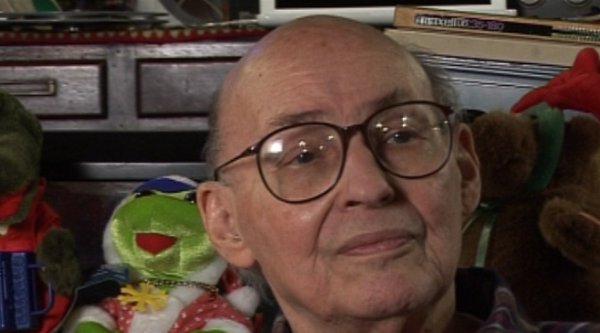

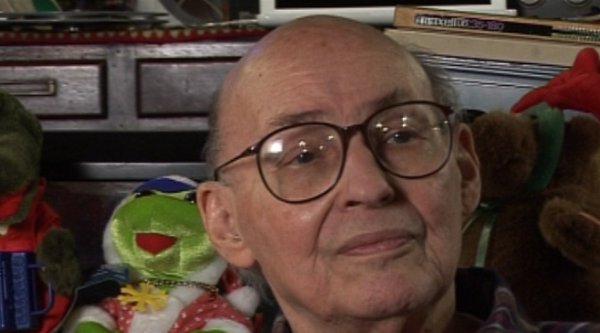

Marvin Minsky (1927-2016) was one of the pioneers of the field of Artificial Intelligence, founding the MIT AI lab in 1970. He also made many contributions to the fields of mathematics, cognitive psychology, robotics, optics and computational linguistics. Since the 1950s, he had been attempting to define and explain human cognition; his ideas on the subject can be found in his two books, The Emotion Machine and The Society of Mind. His many inventions include the first confocal scanning microscope, the first neural network simulator (SNARC) and the first LOGO 'turtle'.

Title: The problem with perceptrons

Listeners: Christopher Sykes

Christopher Sykes is a London-based television producer and director who has made a number of documentary films for BBC TV, Channel 4 and PBS.

Tags: 1970s

Duration: 2 minutes, 9 seconds

Date story recorded: 29-31 Jan 2011

Date story went live: 13 May 2011